The Numbers Don't Lie: Why Camera+AI Is Winning the Autonomous Vehicle Race

Recently, our CEO Jard van Ingen shared a striking data point on LinkedIn: vision-based autonomous driving technology (cameras paired with AI) is improving at a rate of 420%, dramatically outpacing any competing approach.


But how do you even measure something like that? And what does a 420% improvement rate actually mean for the future of autonomous vehicles?

Those are fair questions. Below we walk through our methodology to show how we arrived at these figures and, more importantly, what they imply for the future of transportation.

The Tale of Two Technologies

The autonomous vehicle industry faces a fundamental technical decision: LiDAR sensors for laser-precise measurements, or cameras powered by artificial intelligence.

LiDAR advocates point to the precision of laser measurements, direct distance readings, and hardware-based reliability. Camera+AI proponents argue that since roads were designed for human vision, autonomy requires solving computer vision.

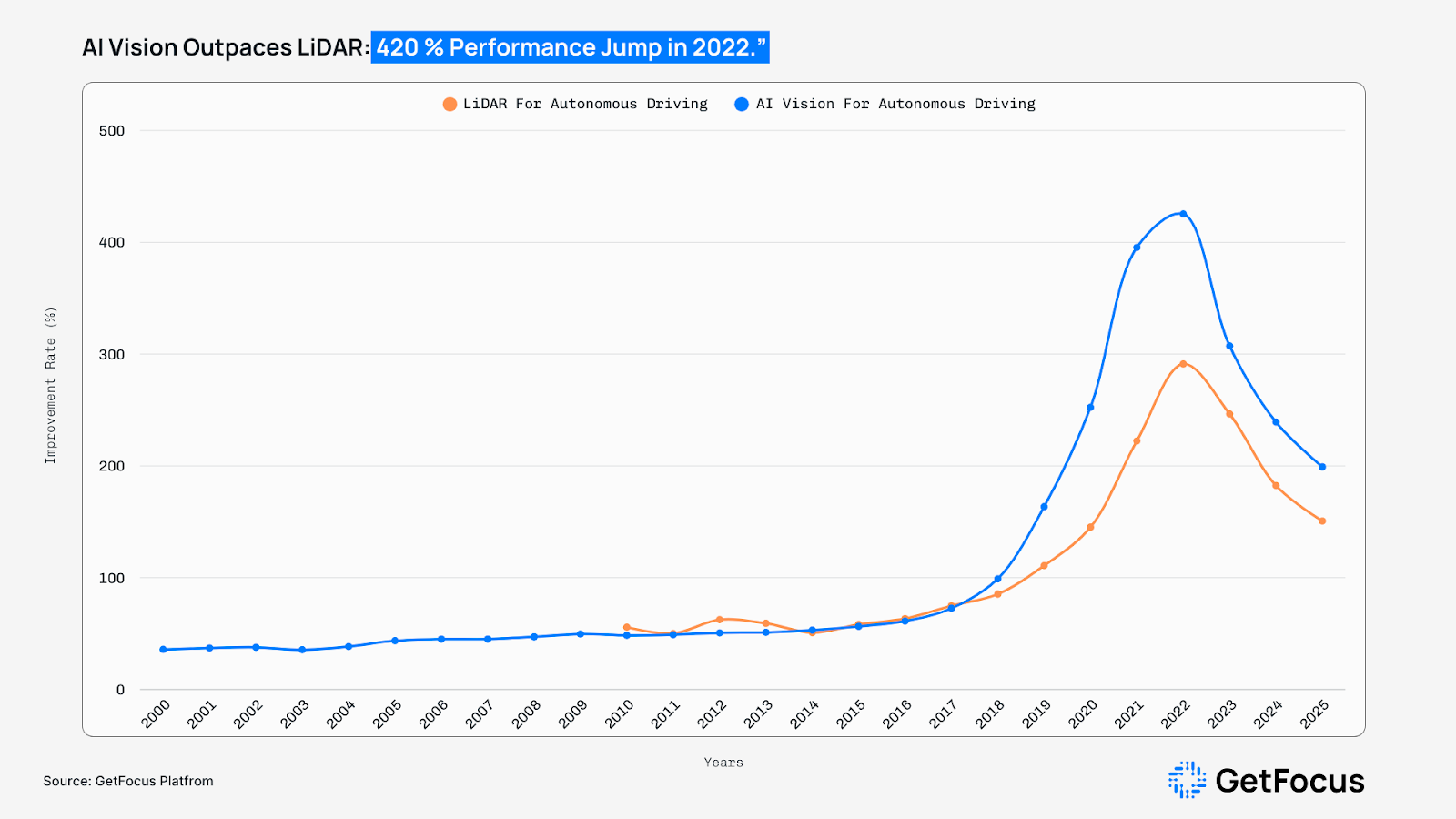

Our data shows which approach is winning based on improvement rates. The technology that improves fastest ultimately dominates the market.

How We Measure the Speed of Innovation

Our methodology, developed in partnership with MIT, focuses on a simple but powerful concept: the rate of improvement. Think of it like compound interest in a bank account—the technology with the fastest improvement rate inevitably pulls ahead, even if it starts behind.
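To make the compound-interest analogy concrete, here is a minimal sketch of how long a faster-improving technology takes to overtake one that starts ahead. The function name, the starting performance levels, and the growth rates are all hypothetical illustration values, not figures from our analysis.

```python
import math

def periods_to_overtake(leader_start, chaser_start, leader_rate, chaser_rate):
    """Periods until the chaser's compounded performance passes the leader's.

    Rates are fractional growth per period (0.5 means +50% per period).
    All inputs are hypothetical illustration values, not measured data.
    """
    if chaser_start >= leader_start:
        return 0.0  # the chaser is already level or ahead
    if chaser_rate <= leader_rate:
        return math.inf  # a slower-compounding chaser never catches up
    # Solve chaser_start * (1 + rc)**t == leader_start * (1 + rl)**t for t.
    head_start = math.log(leader_start / chaser_start)
    relative_growth = math.log((1 + chaser_rate) / (1 + leader_rate))
    return head_start / relative_growth

# Hypothetical example: the chaser starts at a quarter of the leader's
# performance but improves 50% per period versus the leader's 20%.
t = periods_to_overtake(leader_start=100.0, chaser_start=25.0,
                        leader_rate=0.2, chaser_rate=0.5)
print(f"Chaser overtakes after about {t:.1f} periods")
```

With these made-up inputs the crossover arrives after roughly six periods, even though the chaser begins four times behind. The head start only delays the outcome; the rate gap decides it.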

We measure the improvement rate through two key factors hidden in patent data:

- Cycle Time: How quickly a technology takes inventive steps

- Knowledge Flow: How significant those steps are

Technologies that take big leaps quickly are on a winning trajectory. When we applied this analysis to autonomous vehicle sensors, the results were unequivocal.

Both technologies are advancing rapidly, but at different speeds. Camera+AI's improvement rate has reached over 420%, while LiDAR is progressing at approximately 290%. This gap of roughly 130 percentage points suggests that vision-based systems are pulling ahead in the race for autonomous vehicle dominance.
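One way to read the gap between the two figures is sketched below. It assumes, purely for illustration, that each percentage can be treated as a growth rate over the same (unspecified) period; the article does not define the period, and `growth_factor` is a hypothetical helper.

```python
def growth_factor(rate_percent):
    """Convert a percentage improvement rate into a per-period multiplier."""
    return 1 + rate_percent / 100

# Figures quoted in the article; treating them as per-period growth
# rates is an assumption made only for this illustration.
vision = growth_factor(420)  # 5.2x per period
lidar = growth_factor(290)   # 3.9x per period

gap_points = 420 - 290          # absolute gap in percentage points
relative_gain = vision / lidar  # how much vision gains on LiDAR each period

print(f"Gap: {gap_points} percentage points")
print(f"Relative gain per period: {relative_gain:.2f}x")
```

Under that reading, the percentage-point gap translates into vision-based systems gaining about a third on LiDAR in every period, and that advantage compounds.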

In the exponential world of technology development, the faster-improving solution typically becomes the long-term winner, even when both technologies are advancing at historically impressive rates.

Why Camera+AI Is Accelerating Faster Than LiDAR

The astronomical improvement rate of Camera+AI isn't random—it's powered by two of the most potent forces in modern technology.

First, there's Moore's Law. AI performance is closely tied to the computing power it runs on. For over 50 years, the number of transistors on a chip has doubled roughly every two years, making compute exponentially more powerful and cheaper. This steady growth in compute capacity is a catalyst for AI progress.

Every new generation of chips makes the AI brain in autonomous vehicles smarter and more affordable.

Second, there's the speed of software. Here's where the fundamental difference becomes clear: LiDAR's progress is constrained by the physics of manufacturing—optics, lasers, and mechanical components that must be precisely engineered and physically produced. Camera+AI's progress is primarily a software problem.

What does that mean? When researchers develop breakthroughs in AI models—like the revolutionary Convolutional Neural Networks or Transformers—these advances can be distributed globally almost instantly through over-the-air updates. This creates a rapid feedback loop: more data from vehicles on the road trains better AI, which gets deployed on more powerful hardware, which generates more data, and the cycle accelerates.

The Tesla Case Study: Betting on the Rate of Change

This brings us to the most prominent real-world test of this theory: Tesla and Elon Musk's controversial declaration that "anyone relying on LiDAR is doomed."

“Lidar is a fool’s errand,” Elon Musk said. “Anyone relying on lidar is doomed. Doomed! [They are] expensive sensors that are unnecessary. It’s like having a whole bunch of expensive appendices. Like, one appendix is bad, well now you have a whole bunch of them, it’s ridiculous, you’ll see.”

Elon Musk, TechCrunch, 2019

At the time, most observers saw this as a contrarian gamble—maybe even reckless. Musk was betting against an industry consensus that favored the precision and reliability of laser-based sensing.

But from our perspective, the decision wasn't a gamble at all. It was an intuitive bet on the technology with the superior improvement rate. Musk chose the horse that was learning and accelerating faster.

By committing to a vision-only system, Tesla created something competitors couldn't match: the world's largest real-world training dataset. Every Tesla on the road became a data collection vehicle, feeding billions of miles of driving experience back into their AI systems. This massive scale advantage has accelerated their AI's learning curve at a pace that traditional automakers, with their smaller fleets and LiDAR dependencies, simply cannot match.

The recent milestone of a Tesla autonomously delivering itself to a customer—with no human in the car and no remote operators—serves as a powerful proof point of where this trajectory leads.

Today's Reality Check: Strengths and Weaknesses

While improvement rates predict the ultimate winner, it's worth understanding what each technology brings to the table right now. This snapshot reveals exactly which problems Camera+AI is solving at an exponential rate.

LiDAR's core strength lies in providing direct, geometrically precise 3D data through time-of-flight measurements. Cameras provide rich 2D contextual data—color, texture, text—that AI must use to infer 3D geometry.

This creates a detailed set of capabilities that we can compare directly:

The critical insight from our data is that Camera+AI's primary weaknesses—geometric ambiguity, weather sensitivity, and computational complexity—are fundamentally software and data problems. These are exactly the kinds of challenges that get solved at exponential rates.

LiDAR's primary weaknesses, high cost and manufacturing complexity, improve more slowly than Camera+AI's because they are constrained by physical manufacturing processes.

What This Means for the Future

Based on our analysis of technological improvement rates, we see two clear trends emerging:

Camera+AI will become the foundational perception system. The combination of exponential improvement rates and rapidly falling compute costs positions Camera+AI as the dominant solution for mass-market autonomous driving. It's the only approach with a viable path to the low cost and high scalability required for widespread adoption.

LiDAR won't disappear—it will find a new role. As LiDAR costs continue their own steady decline (from $75,000 a decade ago to a projected sub-$200 by 2025), the technology will shift into a supporting role. For premium robotaxis and high-end vehicles where safety redundancy is paramount, manufacturers will likely add low-cost LiDAR as a backup sensor, providing deterministic geometric data to support the primary vision system.
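As a rough check on what that cost curve implies, the sketch below computes the constant yearly decline that would take a sensor from $75,000 to $200. It assumes "a decade" means exactly 10 years and a smooth, constant rate of decline; both are simplifications, and `implied_annual_decline` is a hypothetical helper.

```python
def implied_annual_decline(start_cost, end_cost, years):
    """Constant yearly fractional decline that takes start_cost to end_cost."""
    return 1 - (end_cost / start_cost) ** (1 / years)

# Cost figures from the article; the 10-year span and the constant
# rate of decline are assumptions made for this illustration.
decline = implied_annual_decline(start_cost=75_000, end_cost=200, years=10)
print(f"Implied average decline: {decline:.0%} per year")
```

Because the projection is "sub-$200", the result is a lower bound on the average decline: a steep drop in price, but one driven by manufacturing progress rather than software updates.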

The Signal Is Clear

The autonomous vehicle perception race follows established patterns in technological disruption. Success depends on improvement velocity, not current performance.

The 420% improvement rate we're measuring in Camera+AI represents a technology on an exponential trajectory. Our data analysis reveals which approach will dominate autonomous vehicle perception.
